The virtualization buzz

I have recently worked on network virtualization. Many people, especially the network guys, have lately been excited about the VMware Vswitch or Cisco Nexus stuff. I understand that, because virtualization is cool. It brings many convenient features that truly make life easier.

But what about security? Convenience and security rarely come together, right? Oh, wait… we are in 2011, so lessons must have been learned. After all, Mr Salesman swears that it is more secure than ever. Convenience and security packed together, he says… it sounds promising. Let’s dig a little to find out what they won’t tell you…

I will focus on what really changes with virtualization: the architecture. One of the main goals of the technology is to reduce the number of physical devices in order to cut costs and save space and energy. Of course, this goes with a simplification of the physical architecture. Therefore, some functions previously handled by dedicated physical devices are now handled logically by a single piece of hardware.

This obviously goes against security best practice, which recommends separating network zones with different degrees of exposure. But has the technology evolved so much that we should reconsider these recommendations?

VMware Vswitches or Nexus 1000V

These technologies are similar in the sense that they are designed to work directly inside the VMware platform. Vswitches are integrated into VMware’s own solution, while the Nexus 1000V benefits from Cisco’s experience and brings more layer 2 control (more settings, more protocols).

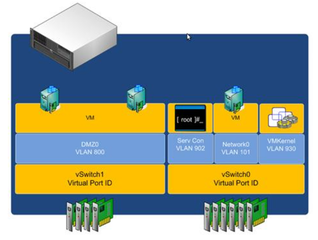

Both in VMware’s architecture documents and in the Vcenter administration interface, it looks very easy to create segregated switches and thus build a DMZ architecture in a few clicks.

But reality is slightly different, as Brad Hedlund from Cisco shows in an interesting article: the vswitch illusion and DMZ virtualization. In short, whether you are using VMware Vswitches or the Nexus 1000V, a single-threaded program runs all the configured virtual switches. In other words, all the virtual switches share the same memory space. So any vulnerability in that code could compromise all the switches, that is, the entire network. And, no surprise here, there have been many vulnerabilities. Just browse a CVE database if you want to check.
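
The shared-fate argument above can be made concrete with a small sketch. It is only a toy model, not a description of how any VMware or Cisco product is implemented: the zone names, engine names and the `blast_radius` helper are all hypothetical. It simply lists which zones fall together when the code that switches one of them is compromised.

```python
def blast_radius(zone_to_engine, compromised_zone):
    """Return every zone switched by the same engine as the compromised zone.

    An "engine" here stands for whatever code and memory space does the
    switching: one process on a hypervisor, or one physical switch.
    """
    engine = zone_to_engine[compromised_zone]
    return sorted(zone for zone, e in zone_to_engine.items() if e == engine)


# Hypothetical layout 1: three "segregated" virtual switches that are in
# fact run by one and the same program on the host.
virtualized = {
    "dmz": "host1-vswitch-process",
    "internal": "host1-vswitch-process",
    "management": "host1-vswitch-process",
}

# Hypothetical layout 2: one physical switch per zone.
physical = {
    "dmz": "switch-a",
    "internal": "switch-b",
    "management": "switch-c",
}

print(blast_radius(virtualized, "dmz"))  # ['dmz', 'internal', 'management']
print(blast_radius(physical, "dmz"))     # ['dmz']
```

The model ignores everything else that matters in practice (firewalls, hardening, monitoring); it only shows why logical separation inside one memory space is not the same thing as separation between devices.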

So you don’t want to rely on such a design for your datacenter, right?

Nexus 7000

In the case of the Nexus 7000, it is a little bit different because most of the switching work is handled by dedicated hardware, which has a much smaller attack surface than the vswitch software. But is it really safe?

The Nexus family is quite new and, from what I could witness, Cisco is quite pushy in selling it. Because it is new, there is still not much information about the technologies used, nor much user feedback or security research. Anyway, below is a quick summary of what I could find.

A few words about the architecture

In a layer 3 Nexus architecture, the Nexus 2000, 5000 and 7000 are designed to work together. Nexus 2000 units are basically top-of-rack port panels with no intelligence. The Nexus 5000 takes care of most of the layer 2 switching, while the Nexus 7000 adds layer 2 features and layer 3 support. The Nexus 2000 and 5000 can work without the 7000, but in that case there is not much difference from a classic layer 2 switch in terms of security (although it has the advantage of being easier to integrate into a datacenter). This and this may help you to visualize the differences.

So we will focus on the Nexus 7000 architecture, which brings VDCs (Virtual Device Contexts) as a way to handle DMZ architectures. VDCs are somewhat similar to VLANs. But whereas VLANs virtualize LANs on a switch, VDCs virtualize switches. So, on the same Nexus 7000 device, VDCs add the capacity to have multiple virtual switches which are, in theory, properly isolated.

This is a very basic summary of what we are interested in, but if you want to learn more, I encourage you to read the Cisco whitepaper about VDCs.

The flaws

Now that the introductions are made, the downside…

George Hedfors is the only researcher who has done notable work on this platform, as far as I am aware. He made some really great findings, which you can discover in his slides.

At the time of his work (2010), it appeared that NX-OS was built on a Linux 2.6.10 kernel (released in 2004!). We can imagine that the OS has been significantly customized and hardened by Cisco. They may have included NX-bit support (available since 2.6.8 and later improved). However, there is probably no ASLR support (2.6.12) and no MAC system (SELinux or Tomoyo). Of course, I may be wrong, but I haven’t found any documentation about that and my Cisco contact did not provide me with any substantial detail.
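
The reasoning in the paragraph above is just a version comparison, which can be sketched in a few lines. The version numbers are the ones quoted above and are taken as given, not re-verified here; the helper names are made up for the illustration. A `True` only means the feature could exist in an upstream kernel of that age, since a vendor may backport or remove features.

```python
def parse_version(text):
    """Turn '2.6.10' into (2, 6, 10) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


# Kernel versions as quoted in the text above (assumed, not re-checked).
INTRODUCED_IN = {
    "NX-bit support": "2.6.8",
    "ASLR": "2.6.12",
}


def possible_mitigations(kernel):
    """Map each mitigation to whether an upstream kernel this old could have it."""
    running = parse_version(kernel)
    return {
        name: running >= parse_version(since)
        for name, since in INTRODUCED_IN.items()
    }


print(possible_mitigations("2.6.10"))
# {'NX-bit support': True, 'ASLR': False}
```

Comparing tuples of integers rather than raw strings matters here: as plain text, "2.6.10" sorts before "2.6.8", which would give the wrong answer for the NX bit.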

Anyway, he found a bunch of design flaws:

- Poor CLI design: there are 686 hidden commands (system, debugging) that can be launched as root (sudo without password). One of these commands is gdb, which can start a network daemon as root. An attacker can then connect to that socket and attach to any process on the system to escalate privileges. Of course, this requires some shell access, so the exposure is limited. However, it says a lot about how the system was designed!

- Insecure daemon configuration: daemons are not chrooted and run as the root user.

- Embarrassing CDP vulnerability: a vulnerability from 2001 was reintroduced in the code handling CDP. So it is possible to crash a daemon running as root. What if another vulnerability in a layer 2 daemon (vtp, hsrp, stp…) were discovered that allowed the stack to be overwritten? Game over, the attacker is root.

- Strange hidden account: there is a hidden ftpuser account with a dumb password (nbv123). Secret backdoor? I don’t know, but in any case it is not professional at all and should have been caught by any decent audit.

- Shell design flaw: the VSH shell accepts a parameter (-a) that allows any command to be spawned, bypassing the security roles normally in place.

- You can also get a root shell by simply spawning ssh `/bin/bash` from the CLI.
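
Several of these findings (daemons running as root in particular) are the kind of thing a basic audit script would flag. Below is a minimal sketch of such a check. It parses a made-up process listing in a `USER PID COMMAND` layout; the sample data, the daemon names and the `root_daemons` helper are all hypothetical and do not come from a real NX-OS system.

```python
def root_daemons(ps_output, should_be_unprivileged):
    """Return (pid, command) for listed daemons that are running as root."""
    findings = []
    for line in ps_output.strip().splitlines()[1:]:  # skip the header row
        user, pid, command = line.split(None, 2)
        if user == "root" and command in should_be_unprivileged:
            findings.append((int(pid), command))
    return findings


# Hypothetical listing, invented for this example.
SAMPLE = """\
USER       PID COMMAND
root         1 init
root       412 cdpd
root       430 stpd
daemon     455 ntpd
"""

# Daemons that parse untrusted network input and therefore should not be root.
print(root_daemons(SAMPLE, {"cdpd", "stpd", "ntpd"}))
# [(412, 'cdpd'), (430, 'stpd')]
```

A check this simple obviously proves nothing about a real device, but it shows how little effort is needed to notice that network-facing daemons are running with full privileges.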

To any serious security guy or Unix administrator, these should look like amateurism. And what the hell are all the security audits for?

So, concerning the Nexus 7000, it is obvious that at best it was not specifically designed to be secure, and at worst it was simply as poorly designed (or released as hastily) as most stuff.

Conclusion

In conclusion, one thing we can tell for sure is that none of the virtualized networking solutions are designed to be secure. Of course, hopefully all these flaws are already fixed or soon will be. But, despite what Cisco may claim, the facts are here: there is no VDC miracle. The Nexus platform is certainly great, but no more bug-free or flaw-free than any other piece of code.

No virtualized architecture can give the same degree of protection as physical segregation.

In the case of Vswitches or the Nexus 1000V, the attack surface is just too large to use them for DMZ segregation if you are serious about security. The vulnerabilities are already here, and it will be feasible for a skillful and motivated attacker to own your datacenter.

Concerning the Nexus 7000 and its VDCs, the attack surface is considerably reduced because there is less code and there are fewer protocols at layer 2. However, it is undoubtedly less secure than physical segregation. Any zero-day vulnerability would potentially expose the datacenter (and we all know that some zero-days take years to become public, which is a lot of time for criminals or government agencies to exploit them). You can’t take this lightly when the integrity of the whole datacenter is at stake, and it doesn’t make sense if you have expensive (in cash or in labor hours) security at the upper layers.

But, of course, it may depend on what you have to protect. If your datacenter hosts sensitive data for your company’s business, then you should think twice about how you deploy virtualization or use the cloud.

Don’t get me wrong. These technologies are great and very useful. In many areas, they are an improvement. They simply must be used with as much care as always. Concerning the DMZ topic, as far as I am concerned, I will not rely on virtualization and will keep physical segregation between zones, supported by different devices from different makers.

One thing I keep an eye on, though, is the development of virtualized firewalls, IPS, etc. In a few years, if these technologies become really mature (enforcing segregation on all OSI layers) and the security of the hosting OS really improves, most of the concerns raised here would be addressed.