Context

I have been playing with Docker again recently, just for fun and to learn.

It is very powerful, but still young. It quickly shows its limits when it comes to security or persistence. There are workarounds, but they are more or less complex and more or less hacky.

In particular, I had some issues with Etherpad, which is a Node.js application, and its integration into Docker.

Initially, I made something quite simple, so my Dockerfile ended like this:

    USER etherpad
    CMD ["node","/opt/etherpad-lite/node_modules/ep_etherpad-lite/node/server.js"]

This simply starts the app as a low-privileged user.

It worked, but I had two issues:

- Docker was not able to stop it cleanly. Instead, docker stop timed out after 10 seconds and finally killed the app and the container altogether.

- No persistence of any kind, of course.

I decided to tackle these two issues to understand what was going on behind the scenes.

The PID 1 issue

I did not immediately understand the first issue: why was Docker unable to terminate the container properly?

After wandering down the wrong paths for a few hours (trying to get by with Node.js tools such as nodemon or supervisor), I finally found some good articles explaining that Docker lacks an init system to catch signals. This causes issues with applications started as PID 1, which ignores signals such as SIGTERM unless the application installs a handler for them, and with applications started through Bash, because the shell does not forward the signals it receives.

I am not going to repeat poorly what has already been explained very well, so I encourage you to read these two excellent posts:

You will also find a lot of bug reports about this issue on the Docker GitHub repository, and a lot of hacky or overkill solutions.

In my opinion, the most elegant solution among them is to use a very simple launcher program dedicated to catching and handling signals.
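To make the idea concrete, here is a minimal sketch in plain shell of what such a launcher does: it starts the real program as a child, traps SIGTERM, and forwards it. The function name `run_forwarding` is made up for this illustration. A real launcher does more than this (reaping orphaned processes, for instance), so treat it as a teaching aid and not as a replacement.

```shell
#!/bin/sh
# Hypothetical illustration of a signal-forwarding launcher.
# Usage: run_forwarding COMMAND [ARGS...]
run_forwarding() {
    # Start the real program in the background and remember its PID.
    "$@" &
    child=$!

    # When the launcher receives SIGTERM, pass it on to the child.
    trap 'kill -TERM "$child" 2>/dev/null' TERM

    # wait returns early (status > 128) if a trapped signal arrives.
    wait "$child"
    child_status=$?

    # If the child is still around (it was just signalled and is shutting
    # down), keep waiting until it is gone and collect its real exit status.
    while kill -0 "$child" 2>/dev/null; do
        wait "$child"
        child_status=$?
    done

    trap - TERM
    return "$child_status"
}

# Example (not executed here):
#   run_forwarding node /opt/etherpad-lite/node_modules/ep_etherpad-lite/node/server.js
```

With this in place, a SIGTERM sent to the launcher reaches the application, which can then shut down within the grace period instead of being killed.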

I chose dumb-init, as it is well packaged (there are plenty of options) and seems to be well maintained.

So, after installing dumb-init in the Dockerfile, the CMD line should now look like this:

    USER etherpad
    CMD ["dumb-init","node","/opt/etherpad-lite/node_modules/ep_etherpad-lite/node/server.js"]

And indeed, as expected, docker stop now works flawlessly.

Volume permissions

This is where I hit the toughest issue, although persistence is supposed to be straightforward with volumes.

Volumes make it possible to share files or folders between the host and containers, or between containers only. There are plenty of possibilities, nicely illustrated on this blog:

And it works very well… as long as your application runs as root.

In my case, for instance, Etherpad runs as a low-privileged user, which is highly recommended. At startup, it creates an SQLite database, etherpad.db, in its ./var folder.

Mounting a volume of any kind over the ./var folder results in a folder that only root can write to. As a result, of course, launching Etherpad from the CMD instruction fails miserably.

Simple solutions like a chown in the Dockerfile don’t work, because they are applied at build time, before the mount. The mount occurs at runtime and works like a standard Linux mount: it is created by the Docker daemon, with root permissions, over possibly existing data.

My solution was to completely change the way Etherpad is started. I now use an external script which is started at runtime:

- First, it applies the appropriate permissions to the mounted volume with chown,

- Then, it starts Etherpad as a low-privileged user thanks to a su hack.

So now the Dockerfile ends with:

    VOLUME /opt/etherpad-lite/var
    ADD run-docker.sh ./bin/
    CMD ["./bin/run-docker.sh"]

And here is the script:

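    #!/bin/bash
    # Runs as root at container start: fix ownership of the mounted volume
    chown -R etherpad:etherpad /opt/etherpad-lite/var
    # Drop privileges and start Etherpad under dumb-init
    su etherpad -s /bin/bash -c "dumb-init node /opt/etherpad-lite/node_modules/ep_etherpad-lite/node/server.js"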
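One possible refinement, which is not part of my script: a recursive chown on every start can get slow once the volume holds a lot of data, so the script could first check whether anything actually needs fixing. The sketch below assumes a POSIX shell with find and head available in the image; the helper name `needs_chown` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit 0) if anything under directory $1
# is NOT owned by user $2 (a user name or a numeric UID).
needs_chown() {
    dir=$1
    owner=$2
    # find lists every entry not owned by $owner; head keeps only the first,
    # and the test succeeds if that output is non-empty.
    [ -n "$(find "$dir" ! -user "$owner" | head -n 1)" ]
}

# Intended use near the top of the start script (run as root):
#   if needs_chown /opt/etherpad-lite/var etherpad; then
#       chown -R etherpad:etherpad /opt/etherpad-lite/var
#   fi
```

On a freshly mounted, root-owned volume the check succeeds and the chown runs once. On later starts it is skipped as long as the ownership is still correct.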

I use a data volume for persistence, so the run command looks like this:

    docker run -d --name etherpad -p 80:9001 -v etherpad:/opt/etherpad-lite/var -t debian-etherpad

It is far from ideal, but it works. I really hope some features are coming to bring more options in this area, especially in the Dockerfile.

Some final thoughts

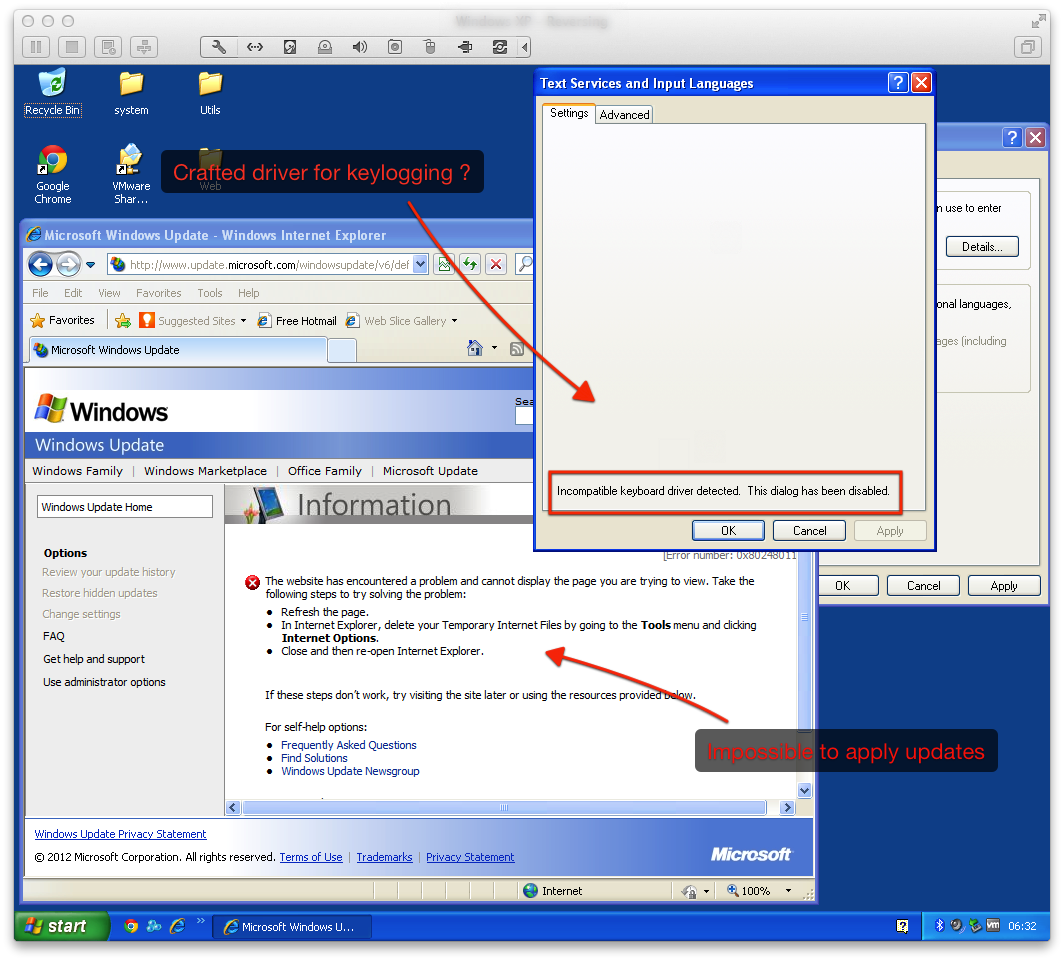

Overall, we can still hope for a lot of improvements in security, because when I look at the many Dockerfiles around, I see two behaviors:

- A lot of people don’t care and everything is happily running as root, from unauthenticated third-party images or binaries…

- Some people do care but end up with dirty hacks, because there is no other way.

It is scary and so far from the Linux philosophy. Let’s wait for the enhancements to come.

You can find the complete updated Dockerfile on this GitHub page.

While we are on this topic, have a look at this post, which has some nice tips and tricks for Docker.