FFFjacking is a new web browser hacking technique discovered by Roman Kümmel (aka .cCuMiNn.).

Even though it requires a little social engineering, it is quite dangerous. Yet another string to add to the bow.

For months I had been having a weird issue with the embedded audio controller of my Gigabyte motherboard.

Plugging headphones into the front panel of my box didn’t mute the output to the speakers, which defeated the purpose of having headphones.

For a long time I thought it was a hardware issue that I would have to sort out some day by opening the box and checking the connections.

Yesterday, I decided to solve it for good and I started to google… and found out that it was a pure software issue!

The culprit turned out to be improper settings of the ALSA module, and this Ubuntu guide just saved me.

I carefully followed the steps and it turned out that, for my Gigabyte GA-790FXTA-UD5, it was necessary to add this line:

options snd-hda-intel model=3stack-hp

to

/etc/modprobe.d/alsa-base.conf

OK, it did not go quite so smoothly: I picked models at random from the list until I found the right one (I had no idea what was embedded on my board). I hope this helps, as I have seen quite a lot of people with similar issues with all kinds of vendors.
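For what it is worth, the change can be sketched as a small idempotent snippet. This is only a sketch: the `CONF` and `LINE` variables are names made up for the illustration, and `CONF` defaults to a scratch file so that nothing on the system is touched unless you point it at the real file yourself (as root).

```shell
# Sketch: add the model option to an ALSA modprobe config file, once.
# CONF defaults to a scratch file so the snippet can be tried safely;
# set CONF=/etc/modprobe.d/alsa-base.conf (as root) for the real change.
CONF="${CONF:-$(mktemp)}"
LINE='options snd-hda-intel model=3stack-hp'

# Append only if the exact line is missing, so re-running is harmless.
grep -qxF "$LINE" "$CONF" 2>/dev/null || printf '%s\n' "$LINE" >> "$CONF"

# Show every snd-hda-intel setting now present in the file.
grep -F 'snd-hda-intel' "$CONF"
```

When trying models one after another, as I did, replace the existing line rather than appending a new one each time, so that only one model is ever set. The setting is only read when the module is loaded, so a reboot is the simplest way to test each attempt.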

I have recently worked on network virtualization. Many people, especially the network guys, have recently been excited about the VMware Vswitch or Cisco Nexus stuff. I understand that, because virtualization is cool. It brings many convenient features that truly make life easier.

But what about security? Convenience and security rarely come together, right? Oh, wait… we are in 2011, so lessons must have been learned. After all, Mr Salesman swears that it is more secure than ever. Convenience and security packed together, he says… it sounds promising. Let’s dig a little to find out what they won’t tell you…

I will focus on what really changes with virtualization: the architecture. One of the main goals of the technology is to reduce the number of physical devices in order to cut costs and save space and energy. Of course, this comes with a simplification of the physical architecture. Therefore, some features previously handled by dedicated physical devices are now handled logically by a single piece of hardware.

This obviously goes against the security best practices about designing network architectures with various degrees of exposure. But has the technology evolved so much that we should reconsider these recommendations?

These technologies are similar in the sense that they are designed to work directly inside the VMware platform. Vswitches are integrated with VMware’s solution, while Nexus benefits from Cisco’s experience and brings more layer 2 control (more settings, more protocols).

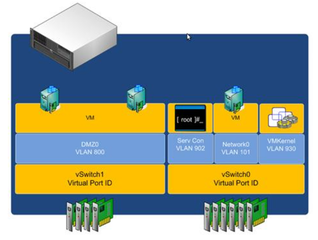

In VMware’s architecture documents as well as in the Vcenter administration interface, it looks so easy to create segregated switches and thus build a DMZ architecture in a few clicks.

But reality is slightly different, as Brad Hedlund from Cisco shows in an interesting article: the vswitch illusion and DMZ virtualization. In short, whether you are using VMware Vswitches or the Nexus 1000V, a single-threaded program runs all the configured virtual switches. Put plainly, all the virtual switches share the same memory space. So any vulnerability in the code would compromise all the switches, in other words the entire network. And, no surprise here, there have been many vulnerabilities. Just browse a CVE database if you want to check.

So you don’t want to rely on such a design for your datacenter, right?

In the case of the Nexus 7000, it is a little different because most of the switching work is handled by specific hardware, which has a much smaller attack surface than the vswitch stuff. But is it really safe?

The Nexus family is quite new and, from what I could witness, they are quite pushy about selling it. Because it is new, there is still not much information about the technologies used, nor much user feedback or security research. Anyway, below is a quick sum-up of what I could find.

In a layer 3 Nexus architecture, the Nexus 2000, 5000 and 7000 are designed to work together. The Nexus 2000 units are basically top-of-rack port panels with no intelligence. The Nexus 5000 takes care of most of the layer 2 switching, while the Nexus 7000 adds layer 2 functionality and layer 3 support. The Nexus 2000 and 5000 can work without the 7000, but in that case there is not much difference from a classic layer 2 switch in terms of security (although they are more flexible to integrate into a datacenter). This and this may help you to visualize the differences.

So we will focus on the Nexus 7000 architecture, which brings VDCs (Virtual Device Contexts) as a way to handle DMZ architectures. VDCs are somewhat similar to VLANs. But whereas VLANs virtualize LANs on a switch, VDCs virtualize switches. So, on the same Nexus 7000 device, VDCs add the capacity to have multiple virtual switches which are, in theory, properly isolated.

This is a very basic sum-up for what we are interested in, but if you want to learn more, I encourage you to read the Cisco whitepaper about VDCs.

Now that the introductions are made, the downside…

George Hedfors is the only researcher who has done notable work on this platform, as far as I am aware. He made some really great findings, which you can discover in his slides.

At the time of his work (2010), it appeared that NX-OS was built on Linux kernel 2.6.10 (released in 2004!). We can imagine that the OS has been significantly customized and hardened by Cisco. They may have included NX-bit support (available since 2.6.8 and later improved). However, there is probably no ASLR support (2.6.12) and no MAC system (SELinux or Tomoyo). Of course, I may be wrong, but I haven’t found any documentation about that and my Cisco contact did not provide me with any consistent detail.

Anyway, he found a bunch of design flaws:

To any serious security guy or Unix administrator, these should look like amateurism. And what the hell are all the security audits for?

So concerning the Nexus 7000, it is obvious that at best it was not specifically designed to be secure, and at worst it was simply as poorly designed (or released too quickly) as most stuff.

In conclusion, one thing we can tell for sure is that none of the virtualized networking solutions are designed to be secure. Of course, hopefully all these flaws have been fixed already or soon will be. But, despite what Cisco may claim, the facts are here: there is no VDC miracle. The Nexus platform is certainly great, but no more bug-free or flaw-free than any other piece of code.

No virtualized architecture can give the same degree of protection as physical segregation.

In the case of Vswitches or the Nexus 1000, the attack surface is just too large to use them for DMZ segregation if you are serious about security. The vulnerabilities are already here and it will be feasible for a skillful and motivated attacker to own your datacenter.

Concerning the Nexus 7000 and its VDCs, the attack surface is considerably reduced because there is less code and fewer protocols at layer 2. However, it is undoubtedly less secure than physical segregation. Any zero-day vulnerability would potentially expose the datacenter (and we all know that some zero-days take years to become public, which leaves criminals or government agencies a lot of time to exploit them). You can’t take that lightly when the integrity of the whole datacenter is at stake, and it doesn’t make sense if you have expensive (in cash or in labor hours) security at the upper layers.

But, of course, it may depend on what you have to protect. If your datacenter hosts data that is sensitive for your company’s business, then you should think twice about how you deploy virtualization or use the cloud.

Don’t get me wrong. These technologies are great and very useful. In many areas, they are an improvement. They simply must be used with as much care as always. Concerning the DMZ topic, as far as I am concerned, I will not rely on virtualization and will keep physical segregation between zones, supported by different devices from different makers.

One thing I keep an eye on, though, is the development of virtualized firewalls, IPS, etc. In a few years, if these technologies become really mature (enforcing segregation on all OSI layers) and the security of the hosting OS really improves, most of the concerns here would be addressed.

We all know that passwords suck, that they are the nightmare of all administrators and security guys. So many hacks have been made easier because the victims reused the same password everywhere: email account, forum, bank, critical systems…

Sadly, so far, there is not even the beginning of a replacement solution. Passwords will be around for a long time, so we had better use them properly.

Yes, I am aware of many on-line services like FisrtPass, KeePass, 1stPassword, etc. However, I don’t feel comfortable with having all my passwords somewhere on-line, even if they claim (and I believe they are sincere) that they use strong encryption and can’t access them.

Instead, I use a combination of the Firefox password manager and the Pwgen add-on. I use this add-on to quickly and conveniently generate a random password when I subscribe to a web service. When Firefox prompts for it, I just choose to remember the password automatically. SSO quick and dirty.

As for the other passwords, the ones I can’t and don’t need to memorize, I store them in a local encrypted file.

To edit the file, I simply use Vim with this nice GPG plugin:

$ gpg --gen-key

$ gpg --encrypt --recipient 'your name' passwords

$ rm passwords

$ vim passwords.gpg

If you don’t like the overhead of GPG, a more straightforward solution is to use the OpenSSL extension:

$ openssl aes-256-cbc -in passwords -out passwords.aes

$ vim passwords.aes
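Before deleting the plaintext file, it is worth checking that the encrypted copy really decrypts. The following is a minimal sketch of such a round trip, not a recommendation of exact options: it works in a scratch directory, and the `WORK` variable, the sample content and the `demo-passphrase` value are placeholders invented for the example.

```shell
# Sketch: encrypt a file with a passphrase, then check it decrypts to the same bytes.
# Everything happens in a scratch directory; names and passphrase are placeholders.
WORK="$(mktemp -d)"
printf 'example.org  alice  s3cr3t\n' > "$WORK/passwords"

# Encrypt. Passing the passphrase with "pass:" is for demonstration only,
# since it shows up in the process list; omit -pass to be prompted instead.
openssl aes-256-cbc -salt -in "$WORK/passwords" -out "$WORK/passwords.aes" -pass pass:demo-passphrase

# Decrypt with -d and compare with the original.
openssl aes-256-cbc -d -in "$WORK/passwords.aes" -out "$WORK/passwords.check" -pass pass:demo-passphrase
cmp "$WORK/passwords" "$WORK/passwords.check" && echo "round trip OK"

# Once the encrypted copy is verified, the plaintext copies should go.
rm "$WORK/passwords" "$WORK/passwords.check"
```

Recent OpenSSL versions print a warning about the default key derivation with this command. Adding `-pbkdf2` to both the encrypt and the decrypt call (available in OpenSSL 1.1.1 and later) avoids it, but whatever opens the file afterwards must then use the same option.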

I believe that, if not perfect, it is pretty secure. I mean no more and no less secure than your system is. Anyway, I don’t have any need for an on-line manager. And you, how do you manage your passwords? Let us know about your tips.

There are many urban legends in the industry. I did believe in one of them: “wiping a disk to properly prevent data restore requires random writes and several passes”.

At least until I found this very instructive article, “Disk Wiping – One pass is enough”. Don’t miss the second part, which clarifies some points and gives more details.

In short, after one pass, every bit of the disk is set to zero and there is simply no way to find out what the previous value was. Even the best tools out there cannot do it.

Then, there is the theory of physically restoring each bit using a magnetic force microscope. It has always come with a high error rate, and with modern high-density disks it is even less reliable. Now, considering any real-world data length, the errors occurring on the restored bits would make it impossible to rebuild any usable data. There is obviously no chance for such a technique to recover a file.

So, in the future, I will not only save time by doing one pass, but I will also replace:

$ dd if=/dev/urandom of=/dev/sda

with

$ dd if=/dev/zero of=/dev/sda

Note that a quick format only rewrites the filesystem metadata. In no way does it clear every bit of the disk.
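To see the single pass at work without risking a real disk, here is a small sketch that runs it against a plain file and then checks the result. The `IMG` and `SIZE_KB` variables are made up for the example; `IMG` merely stands in for a device such as /dev/sda.

```shell
# Sketch: one zero pass over a scratch "disk" (a plain file), then verification.
# IMG stands in for a real device; never point this at a disk you still need.
IMG="$(mktemp)"
SIZE_KB=1024

# Fill the fake disk with random data to play the role of the old content.
dd if=/dev/urandom of="$IMG" bs=1024 count="$SIZE_KB" 2>/dev/null

# The single pass: overwrite every block with zeros, without truncating the target.
dd if=/dev/zero of="$IMG" bs=1024 count="$SIZE_KB" conv=notrunc 2>/dev/null

# Verify: strip all zero bytes and count what is left; anything above 0 is a leftover.
NONZERO="$(tr -d '\000' < "$IMG" | wc -c)"
if [ "$NONZERO" -eq 0 ]; then
    echo "all zeros"
fi
```

On a real device there is no `count`: dd simply runs until the device is full and stops with a "No space left on device" message, which is expected in that case.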

The “cloud” is a buzzword that has been around for months. The marketing guys are pushing it so hard that every IT guy will hear about it at work sooner or later.

Deciding whether to use it or not requires some deep knowledge, because while its pros are clear (you can count on the salesmen to paint a great picture of it again and again), its cons are kept quiet.

Too bad, because a major disadvantage is security. But guess what? The other day an “analyst” presenting his study about cloud computing just brushed the issue aside in 3 words:

“Concerning the people who doubt of the security in the cloud, it is a typical psychological issue of these persons fearing change or something new. There is really nothing concrete to worry about cloud security.”

Well, not sure I am going to see a psychologist. Of course the guy did not give any solid argument, so here we go.

In short, cloud computing exposes to the Internet services that were, in normal conditions, always kept inside an internal network and behind perimeter protections.

Of course, these services offer authentication, but basically almost every traditional web attack will work as usual. After all, we are talking about the same web portal, the same users, the same browsers, etc.

Let’s quickly summarize the potential threats: CSRF, XSS, phishing, SSL attacks (MiTM, certificate spoofing), browser exploits and many more.

So really, it is not a question of being crazy, paranoid or reluctant to change. There are just many issues that don’t make the cloud useless but should call for caution.

Cloud computing can be used for what it is good at (flexibility, convenience) but not to replace a datacenter. It should not be used if security is a concern.

Don’t listen only to the salesman; read what some specialists are saying. Here is a compilation of some interesting articles I found:

And last but not least, in case our favorite salesman keeps pushing:

But that’s not all. The same goes with “virtualization everywhere”, but that will be another topic…